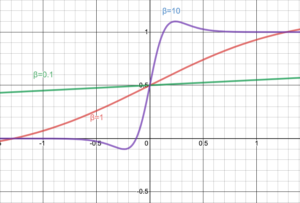

Interestingly, the same authors published another paper about a week later, in which they modified the Swish function by introducing a multiplier β inside the sigmoid, giving f(x) = x·σ(βx), and they call this function "Swish Again". Here, β is a parameter that can be learned during training. The value of β can greatly influence the shape of the curve, the accuracy of the model, and the training time. β must be different from 0; otherwise σ(0) = 1/2 and the function reduces to the linear function f(x) = x/2. As β approaches ∞, the function looks like ReLU.

Swish tends to work better than ReLU on deeper models across a number of challenging data sets. Replacing ReLUs with Swish units improves top-1 classification accuracy on ImageNet by 0.9% for Mobile NASNet-A and 0.6% for Inception-ResNet-v2. The simplicity of Swish and its similarity to ReLU make it easy for practitioners to replace ReLUs with Swish units in any neural network.

When Swish and ReLU are compared on the MNIST data set, both activation functions achieve similar performance up to 40 layers. However, Swish outperforms ReLU by a large margin in the range between 40 and 50 layers, where optimization becomes difficult, so in very deep networks Swish achieves higher test accuracy than ReLU. In terms of batch size, the performance of both activation functions decreases as batch size increases, potentially due to sharp minima.
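The effect of β can be checked numerically. The following is a minimal sketch in plain Python, assuming the form f(x) = x·σ(βx); the names `swish_beta` and `relu` are illustrative and not taken from any library. It shows the two limiting cases: β = 0 gives the linear function x/2, and a large β is practically indistinguishable from ReLU.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def swish_beta(x: float, beta: float = 1.0) -> float:
    """Swish with a scaling parameter beta: x * sigmoid(beta * x)."""
    return x * sigmoid(beta * x)


def relu(x: float) -> float:
    return max(0.0, x)


for x in (-2.0, -0.5, 0.5, 2.0):
    linear = swish_beta(x, beta=0.0)      # equals x / 2
    standard = swish_beta(x, beta=1.0)    # the original Swish
    sharp = swish_beta(x, beta=50.0)      # very close to relu(x)
    print(f"x={x:+.1f}  beta=0: {linear:+.4f}  beta=1: {standard:+.4f}  "
          f"beta=50: {sharp:+.4f}  relu: {relu(x):+.4f}")
```

In a real model β would be a trainable parameter updated along with the weights; here it is fixed by hand only to make the shape change visible.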

The shape of the Swish activation function looks similar to ReLU: it is unbounded above and bounded below. Unlike ReLU, however, it is differentiable at all points. Mathematically, Swish is defined as y = f(x) = x·σ(x), where σ(x) = 1 / (1 + e^(−x)) is the usual sigmoid function. In other words, the function is x times the sigmoid of x. Because small negative inputs still produce a non-zero output, Swish can partially handle the negative input problem.
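As a small illustration of the definition f(x) = x·σ(x), here is a sketch in plain Python. The helper names `swish` and `swish_grad` are chosen for this example; the derivative used is f'(x) = σ(x) + x·σ(x)·(1 − σ(x)), which follows from the product rule.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def swish(x: float) -> float:
    """Swish activation: x * sigmoid(x)."""
    return x * sigmoid(x)


def swish_grad(x: float) -> float:
    """Derivative of Swish, defined for every x (including x = 0)."""
    s = sigmoid(x)
    return s + x * s * (1.0 - s)


for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f"x={x:+.1f}  swish={swish(x):+.4f}  grad={swish_grad(x):+.4f}")
```

For large positive x the output approaches x itself, and for large negative x it approaches 0, which is the ReLU-like behaviour described above.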


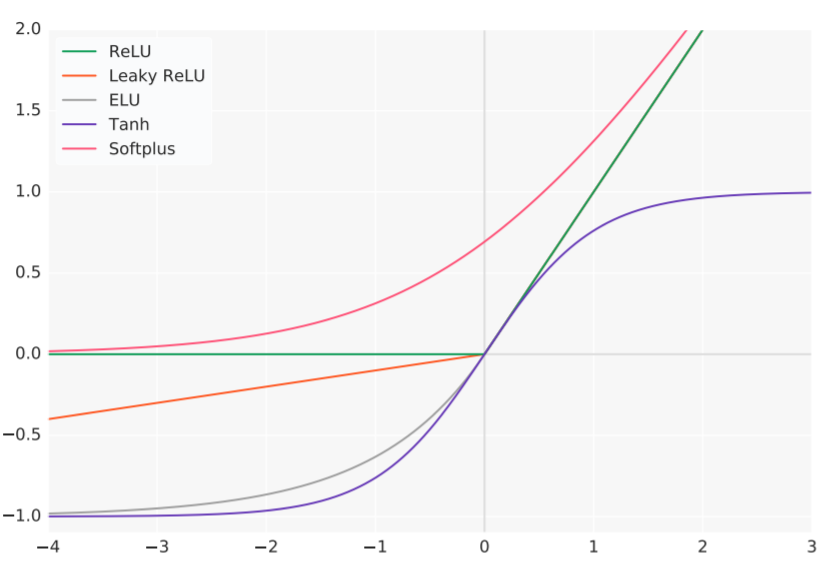

Swish is a non-monotonic function that is continuous at all points, and it outperforms ReLU with respect to the "dying ReLU" problem. Substitutes such as Leaky ReLU and SELU (from Self-Normalizing Neural Networks) have tried to rid ReLU of this issue without fully succeeding, and Swish now looks like a promising step forward.
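The non-monotonic shape can be seen with a quick numerical scan. This is a rough sketch in plain Python, assuming the standard form x·σ(x); the grid range and step size are arbitrary choices made for illustration.

```python
import math


def swish(x: float) -> float:
    """Swish activation: x * sigmoid(x), safe for negative x."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)
    return x * e / (1.0 + e)


# Scan the negative axis from -5.0 to 0.0 in steps of 0.001.
grid = [-5.0 + i * 0.001 for i in range(5001)]
x_min = min(grid, key=swish)
y_min = swish(x_min)

print(f"swish(-5) = {swish(-5.0):+.4f}")
print(f"swish(-1) = {swish(-1.0):+.4f}")
print(f"swish( 0) = {swish(0.0):+.4f}")
print(f"lowest point on the grid: x = {x_min:.3f}, swish(x) = {y_min:.4f}")
```

The output first decreases as x moves from −5 toward roughly −1.28, then increases again toward 0. That small dip below zero is what makes the function non-monotonic, and it is also why the function is bounded below.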

# Swish activation function update

The choice of activation function in deep neural networks has a significant impact on training dynamics and task performance, and it can greatly influence the accuracy and training time of a model.

Swish is one of the newer activation functions. It was first proposed in 2017 by the Google Brain team, who found it using a combination of exhaustive and reinforcement-learning-based search. The authors of the research paper that first proposed Swish report that it matches or outperforms ReLU and its variants, such as Parametric ReLU (PReLU), Leaky ReLU (LReLU), Softplus, Exponential Linear Unit (ELU), Scaled Exponential Linear Unit (SELU), and Gaussian Error Linear Unit (GELU), on a variety of data sets such as ImageNet and CIFAR when substituted into existing model architectures.

But before jumping into how this activation function works, let's recap activation functions in general.

You can think of activation functions as little gatekeepers for the layers of your model. As gatekeepers, they affect what data gets through to the next layer, if any data is allowed to pass at all. Typically, activation functions are mathematical equations that determine the output of a neural network model. The activation function is thus an important part of an artificial neural network: it basically decides whether a neuron should be activated or not, and in many cases it bounds the value of the net input.

There are many activation functions, such as Sigmoid, Tanh, Softmax, ReLU, and Softplus. Currently, the most common and most successful activation function is ReLU, which is f(x) = max(0, x). Yup, that is it! It simply makes sure the value returned doesn't go below 0.

With ReLU, the consistent problem is that its derivative is 0 for half of the values of the input x. Because we use gradient descent as our parameter update algorithm, if the gradient with respect to a parameter is 0, that parameter is never updated: the update just assigns the parameter back to itself (θ = θ). This can leave close to 40% of the neurons in a network dead.
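The dead-neuron effect can be reproduced on a single weight. The following is a toy sketch in plain Python, not a full training loop: it assumes one neuron with output act(w·x), a squared-error loss, and hypothetical values for the weight, input, target, and learning rate. The helper `sgd_step` is written for this example only.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def relu(z: float) -> float:
    return max(0.0, z)


def relu_grad(z: float) -> float:
    # Derivative is 0 for every non-positive pre-activation.
    return 1.0 if z > 0 else 0.0


def swish(z: float) -> float:
    return z * sigmoid(z)


def swish_grad(z: float) -> float:
    s = sigmoid(z)
    return s + z * s * (1.0 - s)


def sgd_step(w, x, target, act, act_grad, lr=0.1):
    """One gradient-descent update for loss = 0.5 * (act(w * x) - target) ** 2."""
    z = w * x
    grad_w = (act(z) - target) * act_grad(z) * x  # chain rule
    return w - lr * grad_w


w0 = -1.0  # with x = 2.0 the pre-activation is -2.0, i.e. negative
w_relu = sgd_step(w0, 2.0, 1.0, relu, relu_grad)
w_swish = sgd_step(w0, 2.0, 1.0, swish, swish_grad)

print("ReLU weight changed: ", w_relu != w0)   # False: the gradient is exactly 0
print("Swish weight changed:", w_swish != w0)  # True: the gradient is non-zero
```

With ReLU the update is θ = θ for as long as the pre-activation stays negative, so the weight is stuck. With Swish the gradient is small but non-zero, so the weight keeps receiving updates.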
